Web scraping is a powerful tool for gathering data, but it's not without its challenges. Errors can and will occur, regardless of your level of expertise. Understanding and handling these errors is crucial for efficient data collection and analysis. This article provides a foundational guide for beginners to navigate through common web scraping errors.

Let's dive into the key points that will help you work through these challenges.

- Understand the Nature of Web Scraping Errors

- Identify Common Errors and Their Causes

- Learn Strategies to Prevent Web Scraping Errors

- Master the Art of Troubleshooting Web Scraping Errors

Understand the Nature of Web Scraping Errors

Web scraping errors are obstacles that every data enthusiast encounters. They can range from simple misconfigurations to complex site-specific issues. These errors often reveal the delicate nature of web scraping, where small changes can have significant impacts. Recognizing the common types of errors is the first step in mastering web scraping.

Identify Common Errors and Their Causes

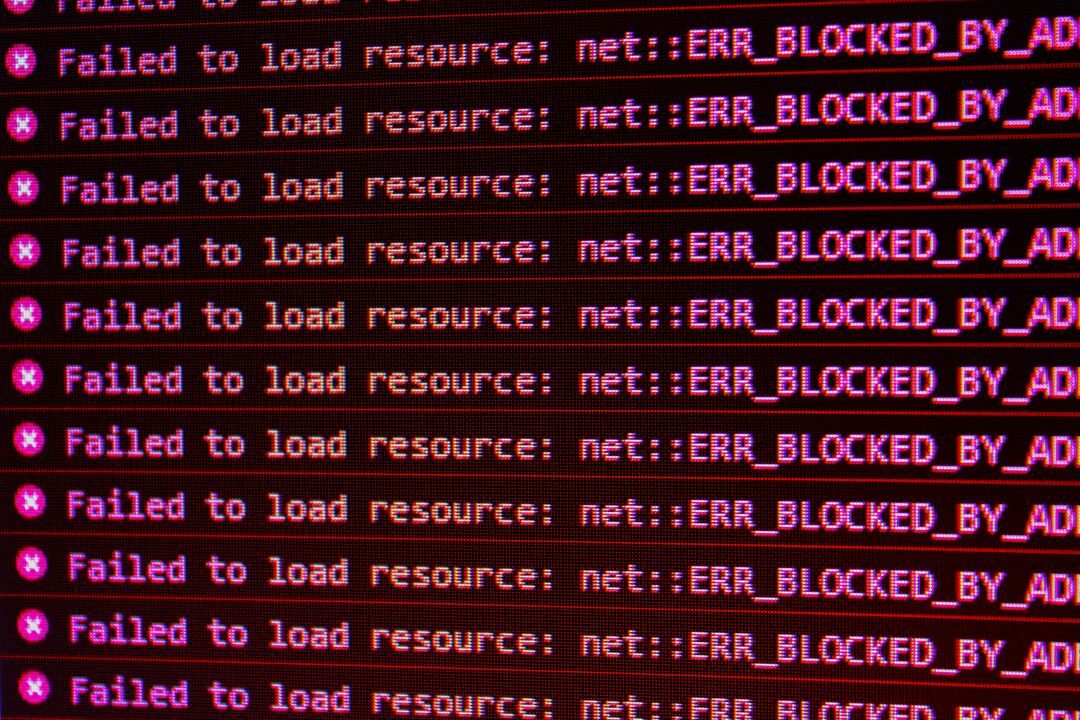

Errors like '404 Not Found' or '403 Forbidden' are common stumbling blocks. Parsing errors occur when a website's structure changes unexpectedly. Frequent and rapid requests can lead to IP bans, while data format errors arise from inconsistencies in the extracted data. Knowing which cause lies behind an error tells you which fix to apply.

Common Errors Include:

- HTTP errors like 404 Not Found or 403 Forbidden.

- Parsing errors due to changes in the website structure.

- IP bans resulting from making too many requests too quickly.

- Data format errors when the scraped data doesn't match expected patterns.

Understanding HTTP Errors in Web Scraping

When performing web scraping, HTTP errors are among the most frequent issues you’ll encounter. They arrive as status codes in the 4xx (client error) and 5xx (server error) ranges, returned by the server to signal that your request could not be fulfilled. Understanding these codes is crucial for diagnosing and troubleshooting your scraping scripts. Here’s a deeper dive into some common HTTP errors:

1. 404 Not Found

What It Means: The server could not find the requested resource. This typically occurs when the URL is incorrect or the resource has been moved or deleted.

Common Causes:

- Incorrect URL: A typo in the URL or an outdated link.

- Page Moved: The webpage has been moved to a different URL without proper redirection.

- Dynamic URLs: The URL may change based on user sessions or other dynamic content.

How to Handle:

- Check and Correct URLs: Verify the URLs you are scraping to ensure they are correct.

- Implement URL Validation: Before making a request, validate the URL format.

- Monitor Changes: Regularly check for changes in the website structure and update your scraping scripts accordingly.
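The first two checks can be sketched with Python's standard library alone. `is_valid_url` rejects malformed URLs before any request is sent. It cannot tell whether a well-formed URL still exists. `fetch_or_skip` logs a 404 and moves on instead of stopping the whole run. Both function names are illustrative, not part of any library.

```python
import logging
import urllib.error
import urllib.request
from typing import Optional
from urllib.parse import urlparse

logger = logging.getLogger("scraper")


def is_valid_url(url: str) -> bool:
    """Cheap format check: require an http(s) scheme and a host name."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def fetch_or_skip(url: str, timeout: float = 10.0) -> Optional[bytes]:
    """Return the page body, or None if the URL is malformed or returns 404."""
    if not is_valid_url(url):
        logger.warning("Skipping malformed URL: %r", url)
        return None
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read()
    except urllib.error.HTTPError as err:
        if err.code == 404:
            # Missing or moved without a redirect: record it for review
            # instead of aborting the whole scraping run.
            logger.warning("404 Not Found: %s", url)
            return None
        raise  # other HTTP errors are left to the caller
```

Redirects (301/302) are followed automatically by `urlopen`. A page that moved *with* proper redirection is therefore fetched normally.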

2. 403 Forbidden

What It Means: The server understands the request but refuses to authorize it. This can happen if the server blocks access to certain resources.

Common Causes:

- IP Blocking: Your IP address might be blocked due to frequent requests.

- User-Agent Blocking: The server might block requests from non-browser User-Agents.

- Access Restrictions: The content might be restricted to logged-in users or specific regions.

How to Handle:

- Rotate IPs: Use proxy servers to rotate IP addresses and avoid bans.

- Set User-Agent Headers: Use a browser-like User-Agent string in your requests.

- Handle Authentication: If the content requires authentication, ensure you handle login procedures correctly.
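The first two fixes can be sketched with Python's built-in `urllib.request`, as a minimal example rather than a complete solution. Every request carries a browser-like User-Agent, and each call goes through the next proxy in a rotating list. The proxy addresses and helper names are hypothetical placeholders; substitute your own.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints: replace with real ones.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]
_proxy_cycle = itertools.cycle(PROXIES)

# A browser-like User-Agent; any current browser's UA string would do.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0 Safari/537.36"
    ),
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url: str) -> urllib.request.Request:
    """Attach browser-like headers to a request."""
    return urllib.request.Request(url, headers=HEADERS)


def next_opener() -> urllib.request.OpenerDirector:
    """Return an opener that routes traffic through the next proxy in turn."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


def fetch(url: str, timeout: float = 10.0) -> bytes:
    """Fetch a URL with browser-like headers via the next rotating proxy."""
    with next_opener().open(build_request(url), timeout=timeout) as resp:
        return resp.read()
```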

3. 500 Internal Server Error

What It Means: The server encountered an unexpected condition that prevented it from fulfilling the request. This is usually a problem on the server side.

Common Causes:

- Server Overload: The server is experiencing high traffic or resource issues.

- Bugs in Server Code: There might be bugs or issues in the server’s code.

- Temporary Issues: The server might be undergoing maintenance or facing temporary problems.

How to Handle:

- Retry Mechanism: Retry the request after progressively longer waits (exponential backoff, e.g. 1 s, 2 s, 4 s), since server-side problems are often temporary.

- Notify Administrator: If the issue persists, consider notifying the website’s administrator (if appropriate).
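Here is one way to write such a retry loop in Python, as a sketch rather than a drop-in implementation. It uses the common "full jitter" variant of exponential backoff. The wait before each retry is drawn at random from an interval that doubles every time, which keeps many clients from retrying in lockstep. The set of retryable status codes is an assumption you should adjust. The `sleep` parameter exists so the function can be tested without actually waiting.

```python
import random
import time
import urllib.error
from typing import Callable, TypeVar

T = TypeVar("T")

# Status codes that usually indicate a temporary condition worth retrying.
RETRYABLE_STATUSES = {429, 500, 502, 503, 504}


def with_backoff(
    func: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func(), retrying retryable HTTP errors with exponential backoff.

    Before retry n (n = 1, 2, ...) it waits a random time between 0 and
    min(max_delay, base_delay * 2 ** (n - 1)) seconds ("full jitter").
    Non-retryable errors, and the final failure, are re-raised.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return func()
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE_STATUSES or attempt >= max_attempts:
                raise
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
            attempt += 1
```

In a scraper, `func` would be a small zero-argument function that opens the URL and returns the body.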

4. 429 Too Many Requests

What It Means: The client has sent too many requests in a given amount of time, and the server is throttling it to prevent abuse. The response may include a Retry-After header stating how long to wait before trying again.

Common Causes:

- High Request Frequency: Sending requests too rapidly.

- Rate Limits: The server imposes rate limits on the number of requests.

How to Handle:

- Respect Rate Limits: Adhere to the rate limits specified by the server.

- Implement Delays: Introduce delays between requests to avoid triggering rate limits.

- Use Rotating Proxies: Distribute requests across multiple IP addresses so that no single IP exceeds the server's limit.
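When a 429 response includes a Retry-After header, the simplest way to respect the limit is to wait exactly as long as the server asks. The header can hold either a number of seconds or an HTTP date, so a Python sketch needs to handle both. The 30-second fallback and the function names are arbitrary choices for illustration.

```python
import time
import urllib.error
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Callable, Optional


def retry_after_seconds(value: Optional[str], default: float = 30.0) -> float:
    """Convert a Retry-After header value into seconds to wait.

    Accepts a delay in seconds ("120") or an HTTP date
    ("Wed, 21 Oct 2015 07:28:00 GMT"); falls back to `default`
    if the header is missing or unparseable.
    """
    if not value:
        return default
    value = value.strip()
    if value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # error type differs across Python versions
        return default
    if when.tzinfo is None:  # treat naive dates as UTC
        when = when.replace(tzinfo=timezone.utc)
    return max(0.0, (when - datetime.now(timezone.utc)).total_seconds())


def wait_for_rate_limit(
    err: urllib.error.HTTPError,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """After a 429 response, pause for as long as the server requests."""
    headers = err.headers
    sleep(retry_after_seconds(headers.get("Retry-After") if headers is not None else None))
```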

5. 503 Service Unavailable

What It Means: The server is not ready to handle the request, often due to being overloaded or down for maintenance.

Common Causes:

- Server Maintenance: The server is temporarily down for maintenance.

- High Traffic: The server is experiencing high traffic and cannot handle additional requests.

How to Handle:

- Retry with Backoff: Implement a retry mechanism with exponential backoff, and honor the Retry-After header if the server sends one.

- Monitor Server Status: Check the server status and try again later if the server is down.

General Tips for Handling HTTP Errors

Log Errors: Keep detailed logs of the errors encountered to identify patterns and recurring issues.

Custom Error Handling: Implement custom error handling logic for different types of errors.

API Documentation: Refer to the API documentation (if available) for error handling guidelines and rate limit policies.

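Use Robust Libraries: Utilize tools with built-in mechanisms for handling HTTP errors, such as Scrapy (whose retry middleware re-sends failed requests) or Requests configured with automatic retries. BeautifulSoup, by contrast, only parses HTML. It does not make HTTP requests, so error handling must happen in whatever library fetches the page.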
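The first two tips fit together naturally: log every failure, then choose a response based on the status code. The sketch below assumes a hypothetical policy table that maps codes to actions such as "skip" or "retry". In a real scraper each action would trigger the corresponding routine, and the counter makes recurring problems easy to spot.

```python
import logging
from collections import Counter

logger = logging.getLogger("scraper")

# Hypothetical policy: which action to take for each status code.
ACTIONS = {
    404: "skip",    # page is gone: record it and move on
    403: "rotate",  # blocked: change headers/proxy or check access rules
    429: "wait",    # rate limited: slow down, honor Retry-After
    500: "retry",   # transient server faults: retry with backoff
    502: "retry",
    503: "retry",
    504: "retry",
}

error_counts = Counter()  # status code -> number of occurrences


def handle_status(url: str, status: int) -> str:
    """Log an HTTP error, count it, and return the action to take."""
    action = ACTIONS.get(status, "fail")
    error_counts[status] += 1
    logger.warning("HTTP %d for %s -> %s", status, url, action)
    return action
```

To keep the log on disk, configure logging once at start-up, for example with `logging.basicConfig(filename="scraper_errors.log", level=logging.WARNING)`. You can then review `error_counts.most_common()` after a run.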

By understanding and effectively handling these HTTP errors, you can significantly improve the reliability and efficiency of your web scraping projects.

Understanding Parsing Errors in Web Scraping

Parsing errors occur when the web scraping script fails to correctly interpret the HTML structure of a webpage. These errors can lead to incomplete or incorrect data extraction, which undermines the effectiveness of your scraping efforts. Let’s delve into the causes, common scenarios, and strategies for handling parsing errors.

Causes of Parsing Errors

Changes in Website Structure: Websites frequently update their layout or HTML structure, which can break your scraping scripts.

Even minor changes, such as renaming a class or ID attribute, can cause parsing errors.

Dynamic Content: Websites that use JavaScript to load content dynamically may cause issues because the HTML received by your scraper may not contain the desired data.

This is common with single-page applications (SPAs) and sites using frameworks like React or Angular.

Inconsistent HTML Markup: Poorly structured or inconsistent HTML can lead to parsing difficulties.

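Missing closing tags, malformed tags, or irregular nesting of elements force the parser to guess at the intended structure. Different parsers may guess differently, leaving elements where your selectors don't expect them.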

Incorrect CSS Selectors/XPath Expressions: Using incorrect or overly specific CSS selectors or XPath expressions can lead to parsing errors.

These selectors must match the exact structure of the HTML to extract the desired data.

Common Scenarios of Parsing Errors

Element Not Found: When the scraper searches for an HTML element that does not exist or has changed its identifier (e.g., ID or class).

Example: soup.find('div', {'class': 'product'}) returns None, and a chained call such as .text then raises an AttributeError.
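A defensive pattern for this scenario is to check for None before touching the result. A layout change then produces a clear warning instead of an AttributeError deep inside your script. This is a sketch using BeautifulSoup and a small made-up HTML snippet; the `extract_text` helper is hypothetical.

```python
import logging
from typing import Optional

from bs4 import BeautifulSoup

logger = logging.getLogger("scraper")

# Made-up markup: the site has renamed its "product" class to "item".
html = """
<div class="item">
  <h2 class="title">Desk lamp</h2>
</div>
"""
soup = BeautifulSoup(html, "html.parser")


def extract_text(soup: BeautifulSoup, name: str, css_class: str) -> Optional[str]:
    """Return the stripped text of the first matching tag, or None if absent."""
    tag = soup.find(name, class_=css_class)
    if tag is None:
        # Without this check, tag.get_text() would raise AttributeError.
        logger.warning("No <%s class=%r> found; has the layout changed?", name, css_class)
        return None
    return tag.get_text(strip=True)


print(extract_text(soup, "div", "product"))  # None (and a warning is logged)
print(extract_text(soup, "h2", "title"))     # Desk lamp
```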

Incorrect Data Extraction: Extracted data is incomplete or does not match the expected format.

Example: Extracting a list of prices, but some prices are missing due to changes in HTML structure.
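Missing fields do the most damage when names and prices are scraped into two separate lists and then zipped together. One absent price shifts every later pairing. The sketch below uses BeautifulSoup and invented markup to contrast that approach with reading each field from inside its own product container. There, a gap simply becomes None.

```python
from bs4 import BeautifulSoup

# Invented listing: the second product has no price element.
html = """
<div class="product"><span class="name">Pen</span><span class="price">$1.50</span></div>
<div class="product"><span class="name">Notebook</span></div>
<div class="product"><span class="name">Stapler</span><span class="price">$7.25</span></div>
"""
soup = BeautifulSoup(html, "html.parser")

# Fragile: two independent lists. With one price missing they differ in
# length, and zip() pairs "Notebook" with the Stapler's price.
names = [t.get_text(strip=True) for t in soup.select("div.product span.name")]
prices = [t.get_text(strip=True) for t in soup.select("div.product span.price")]
misaligned = list(zip(names, prices))


def parse_products(soup: BeautifulSoup) -> list[dict]:
    """Sturdier: read each field from inside its own product container."""
    rows = []
    for card in soup.select("div.product"):
        name = card.select_one("span.name")
        price = card.select_one("span.price")
        rows.append({
            "name": name.get_text(strip=True) if name is not None else None,
            "price": price.get_text(strip=True) if price is not None else None,
        })
    return rows
```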

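Parsing Library Limitations: A parsing library only sees the HTML it is given, and its output on messy markup depends on the underlying parser.

Example: BeautifulSoup does not execute JavaScript, so content rendered client-side never reaches it. It can also build different trees from the same malformed HTML depending on whether it uses html.parser, lxml, or html5lib.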

Strategies to Prevent and Handle Parsing Errors

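Use Robust Selectors: Opt for selectors anchored on meaningful class names, IDs, or data attributes rather than long positional paths, since they are less likely to break with minor layout changes.

Example: Instead of a positional path such as soup.select('body > div:nth-of-type(3) > div > span'), use soup.select('div.product-name'), which still matches after the surrounding layout is rearranged.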
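Going one step further, a scraper can try several selectors in order of preference and use the first one that matches. The selector list below is an assumption for illustration. `itemprop` attributes from schema.org microdata often survive redesigns because they are not used for styling. Check which stable hooks your target pages actually expose.

```python
from typing import Optional, Sequence

from bs4 import BeautifulSoup

# Ordered from most to least preferred. These hooks are assumptions:
# inspect your target pages to see which ones they actually provide.
NAME_SELECTORS = [
    '[itemprop="name"]',  # schema.org microdata attribute
    "div.product-name",   # meaningful class name
    "h1",                 # broad structural fallback
]


def select_first_text(soup: BeautifulSoup, selectors: Sequence[str]) -> Optional[str]:
    """Return the text of the first selector that matches anything, else None."""
    for css in selectors:
        tag = soup.select_one(css)
        if tag is not None:
            return tag.get_text(strip=True)
    return None
```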

Regular Script Maintenance: Regularly update your scraping scripts to accommodate changes in website structure.

Automate the process of checking for HTML changes and alerting when updates are needed.
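One lightweight way to automate that check is sketched below, assuming BeautifulSoup and a hypothetical set of selectors your scraper depends on. Fetch a sample page on a schedule and report any selector that no longer matches. Then alert on the result through whatever channel you already use: email, a chat message, or a failing CI job.

```python
import logging

from bs4 import BeautifulSoup

logger = logging.getLogger("scraper")

# Hypothetical selectors the scraper relies on, with human-readable labels.
REQUIRED_SELECTORS = {
    "product card": "div.product",
    "product name": "div.product span.name",
    "price": "div.product span.price",
}


def missing_selectors(html: str, required: dict[str, str]) -> list[str]:
    """Return the labels of selectors that match nothing on this page."""
    soup = BeautifulSoup(html, "html.parser")
    return [label for label, css in required.items() if not soup.select(css)]


def check_layout(html: str) -> bool:
    """Log an alert and return False if the page no longer fits the scraper."""
    missing = missing_selectors(html, REQUIRED_SELECTORS)
    if missing:
        logger.error("Layout change suspected; no match for: %s", ", ".join(missing))
        return False
    return True
```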

Handle Dynamic Content: Use tools like Selenium or Puppeteer to render JavaScript and access dynamically loaded content.

Example: Use Selenium to navigate the website and wait for JavaScript to load the content before scraping.
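A sketch of that Selenium workflow in Python, assuming Selenium 4 and a local Chrome installation. `WebDriverWait` polls until an element matching the given CSS selector exists, so the returned `page_source` includes the JavaScript-rendered content. If nothing matches within the timeout, Selenium raises a `TimeoutException`. The function name and the selector you pass are your own choices.

```python
def render_page(url: str, wait_for_css: str, timeout: float = 15.0) -> str:
    """Load a page in headless Chrome and return the rendered HTML.

    Blocks until at least one element matching `wait_for_css` is present,
    so content injected by JavaScript is in the DOM before it is read.
    """
    # Imported here so the rest of the scraper still loads without Selenium.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # no visible browser window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_for_css))
        )
        return driver.page_source
    finally:
        driver.quit()  # always release the browser, even on timeout
```

The returned HTML can then be handed to BeautifulSoup as usual, e.g. `BeautifulSoup(render_page(url, "div.product"), "html.parser")`. Here `url` and `div.product` stand in for your target page and a selector that appears once its content has loaded.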

Strategies to Prevent Web Scraping Errors

Preventing errors before they occur is cheaper than debugging them afterward. By respecting a site's robots.txt and pacing your requests, you avoid many 403 and 429 responses in the first place. Utilizing rotating proxies can also help prevent IP bans. These proactive steps save time and resources, making your scraping efforts more effective and less frustrating.

Preventive Measures Include:

- Adhering to the guidelines in robots.txt files.

- Implementing delays between consecutive requests.

- Using proxy servers to rotate IP addresses.
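The first two measures can be combined with Python's built-in `urllib.robotparser`. This sketch parses robots.txt text, skips disallowed URLs, and waits between the remaining ones. It uses the site's `Crawl-delay` if one is declared and a one-second default otherwise. The bot name is a placeholder. To read a live file, call `set_url("https://example.com/robots.txt")` and `read()` on the parser instead of `parse()`.

```python
import time
from typing import Callable, Iterable, Iterator
from urllib.robotparser import RobotFileParser

USER_AGENT = "ExampleScraperBot/1.0"  # hypothetical bot name


def load_robots(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text already downloaded from the site."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


def polite_urls(
    rp: RobotFileParser,
    urls: Iterable[str],
    default_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[str]:
    """Yield only URLs robots.txt allows, pausing between consecutive ones."""
    delay = rp.crawl_delay(USER_AGENT) or default_delay
    first = True
    for url in urls:
        if not rp.can_fetch(USER_AGENT, url):
            continue  # disallowed: never request it
        if not first:
            sleep(delay)  # pace requests instead of firing them back to back
        first = False
        yield url
```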

Master the Art of Troubleshooting Web Scraping Errors

When errors occur, knowing how to troubleshoot them quickly and effectively is crucial. Retries with exponential backoff can recover from transient HTTP errors such as 429, 500, and 503, while regular script updates help avoid parsing issues. Applying these strategies consistently reduces downtime and improves data quality.

Troubleshooting Strategies Include:

- Handling HTTP errors by implementing retries and backoffs.

- Regularly updating scripts to accommodate website changes.

- Using proxies and VPNs to overcome IP bans.

- Implementing data validation techniques to handle format errors.
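Data validation can be as simple as checking each scraped record against the shape you expect before saving it. This sketch assumes records are dictionaries with `name` and `price` strings, and that prices look like `$1,299.00`. Both the fields and the pattern are illustrative and should be adapted to your data.

```python
import re
from typing import Any

# Accepts e.g. "$1.50", "1299", "$1,299.00"; adapt to the site's format.
PRICE_RE = re.compile(r"^\$?(\d{1,3}(,\d{3})*|\d+)(\.\d{2})?$")


def validate_record(record: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the record looks valid."""
    problems = []
    name = record.get("name")
    if not isinstance(name, str) or not name.strip():
        problems.append("missing name")
    price = record.get("price")
    if not isinstance(price, str) or not PRICE_RE.match(price.strip()):
        problems.append(f"bad price: {price!r}")
    return problems


def price_to_float(price: str) -> float:
    """Normalize a validated price string such as "$1,299.00" to 1299.0."""
    return float(price.strip().lstrip("$").replace(",", ""))
```

Records that report problems can be logged and set aside for review rather than silently written to your dataset.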

Conclusion

Handling web scraping errors is a vital skill for any aspiring data professional. While it might seem daunting initially, with practice and the right strategies, you can significantly minimize these errors. Explore tools like BeautifulSoup and Scrapy, and continue learning to enhance your web scraping proficiency.

Check us out at DataHen: we specialise in custom web scraping services. Click the link here to get a quote for your next big project.