Web scraping is a powerful way to gather data, but it comes with challenges. Errors will occur regardless of your level of expertise, and knowing how to handle them is essential for reliable data collection and analysis. This article is a beginner's guide to the most common web scraping errors and how to deal with them.

Here are the key points this guide covers:

- Understand the Nature of Web Scraping Errors

- Identify Common Errors and Their Causes

- Learn Strategies to Prevent Web Scraping Errors

- Master the Art of Troubleshooting Web Scraping Errors

Understand the Nature of Web Scraping Errors

Web scraping errors are obstacles that everyone who scrapes data runs into. They range from simple misconfigurations, such as a mistyped URL, to issues specific to a single site. They also show how fragile scrapers are: a small change to a page's HTML can break a script that worked the day before. Recognizing the common types of errors is the first step toward handling them.

Identify Common Errors and Their Causes

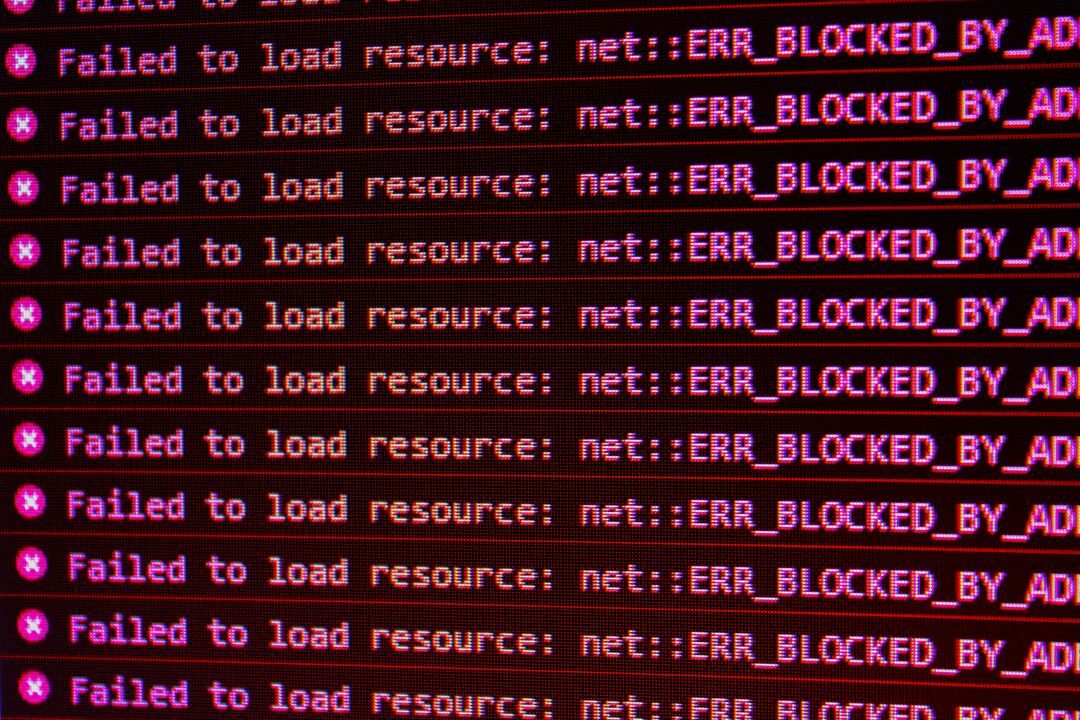

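Common stumbling blocks include HTTP errors such as 404 Not Found (nothing exists at the requested URL) and 403 Forbidden (the server refuses to serve the request). Parsing errors occur when a website's structure changes unexpectedly, so the elements your script looks for are no longer where it expects them. Sending many requests in quick succession can get your IP address banned, and data format errors arise when the extracted values are inconsistent, for example a price field that sometimes holds text instead of a number. Knowing the cause of an error tells you how to respond to it.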
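As a rough illustration of sorting HTTP failures by cause, the Python sketch below maps a status code to a category. The `classify_status` helper and its category names are hypothetical, and the mapping is deliberately incomplete. It also includes 429 Too Many Requests, which many servers send when you are being rate-limited.

```python
from http import HTTPStatus


def classify_status(status: int) -> str:
    """Map an HTTP status code to a rough (hypothetical) error category."""
    if 200 <= status < 300:
        return "ok"
    if status == HTTPStatus.NOT_FOUND:  # 404: nothing exists at this URL
        return "not_found"
    if status == HTTPStatus.FORBIDDEN:  # 403: the server refuses the request
        return "forbidden"
    if status == HTTPStatus.TOO_MANY_REQUESTS:  # 429: slow down
        return "rate_limited"
    if 500 <= status < 600:  # 5xx: a server-side problem, often temporary
        return "server_error"
    return "other"
```

Grouping failures this way makes patterns visible: a burst of `forbidden` or `rate_limited` results suggests you are being blocked, while scattered `not_found` results usually point to stale URLs.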

Common Errors Include:

- HTTP errors like 404 Not Found or 403 Forbidden.

- Parsing errors due to changes in the website structure.

- IP bans resulting from making too many requests too quickly.

- Data format errors when the scraped data doesn't match expected patterns.
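Parsing errors are easiest to deal with when the scraper fails loudly instead of quietly saving empty values. The sketch below assumes a hypothetical product page whose price sits in an element with the class `price`. It uses Python's built-in `html.parser` so it runs without extra installs; with BeautifulSoup, the equivalent check is whether `soup.find(...)` returned `None`. `ParsingError`, `ClassTextParser`, and `extract_price` are illustrative names, not part of any library.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ParsingError(Exception):
    """Raised when a page no longer has the structure the scraper expects."""


class ClassTextParser(HTMLParser):
    """Collect the text inside the first element that carries a given CSS class."""

    def __init__(self, css_class: str):
        super().__init__()
        self.css_class = css_class
        self.depth = 0  # > 0 while we are inside the target element
        self.found = False
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # a nested element inside the target
            return
        if self.found:
            return  # only the first matching element is used
        classes = (dict(attrs).get("class") or "").split()
        if self.css_class in classes:
            self.found = True
            self.depth = 1

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags such as <br/> hold no text

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


def extract_price(html: str) -> str:
    """Return the text of the hypothetical `.price` element or raise ParsingError."""
    parser = ClassTextParser("price")
    parser.feed(html)
    parser.close()
    if not parser.found:
        raise ParsingError("no element with class 'price'; has the page layout changed?")
    text = "".join(parser.parts).strip()
    if not text:
        raise ParsingError("the 'price' element is empty")
    return text
```

An explicit error like this tells you immediately that the site changed and the selector needs updating, rather than leaving you to discover a column of blanks later.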

Learn Strategies to Prevent Web Scraping Errors

Preventing errors is cheaper than fixing them. By following the rules in a site's robots.txt and pacing your requests, you can avoid many common issues. Rotating your requests across proxy servers can also reduce the risk of IP bans. These proactive steps save time and resources and make your scraping more reliable and less frustrating.
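Python's standard library includes `urllib.robotparser`, which can check robots.txt rules before you request a page (its `set_url()` and `read()` methods can also download the file for you). The sketch below parses robots.txt text that you have already fetched and pairs it with a simple throttle that enforces a minimum gap between requests. The user-agent string and the `Throttle` class are assumptions for illustration.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-bot"  # hypothetical; identify your scraper honestly


def load_rules(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text that was downloaded separately."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


class Throttle:
    """Enforce a minimum gap, in seconds, between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self.last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self.clock()
        if self.last is not None:
            remaining = self.min_interval - (now - self.last)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self.last = now
```

In a crawl loop you would skip any URL for which `rules.can_fetch(USER_AGENT, url)` is false and call `throttle.wait()` before each remaining request, using `rules.crawl_delay(USER_AGENT)` as the interval when the site specifies one.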

Preventive Measures Include:

- Adhering to the guidelines in robots.txt files.

- Implementing delays between consecutive requests.

- Using proxy servers to rotate IP addresses.
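Rotating proxies can be as simple as cycling through a list. In the sketch below the proxy addresses are placeholders (use only proxies you are authorized to use), and `ProxyRotator` is a hypothetical helper built on the standard `itertools.cycle` and `urllib.request.ProxyHandler`.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; substitute real ones you are allowed to use.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


class ProxyRotator:
    """Hand out urllib openers bound to proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        """Return (proxy_url, opener) for the next proxy in the cycle."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)
```

Each call returns the chosen proxy along with its opener, so after `proxy, opener = rotator.next_opener()` and `opener.open(url, timeout=10)` you can log which proxy served a failing request. Rotation works best alongside the delays described above, not as a substitute for them.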

Master the Art of Troubleshooting Web Scraping Errors

When errors do occur, you need to diagnose and fix them quickly. Retrying with exponential backoff, where the wait doubles after each failed attempt, handles transient HTTP errors such as 429 Too Many Requests or 503 Service Unavailable; permanent errors like 404 Not Found will not go away on a retry. Regularly updating your scripts keeps parsing issues in check as sites change. Together, these strategies reduce downtime and improve data quality.
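Below is a minimal sketch of retries with exponential backoff using only Python's standard library. The set of retryable status codes, the default delays, and the `fetch`/`sleep` parameters (which exist so the logic can be tested offline) are assumptions, not a standard. Errors outside the retryable set, such as 404 and 403, are re-raised immediately.

```python
import random
import time
import urllib.error
import urllib.request

# Status codes that usually indicate a temporary problem (an assumed list).
RETRYABLE_STATUSES = {429, 500, 502, 503, 504}


def _default_fetch(url):
    """GET the URL with urllib and return the response body as bytes."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read()


def fetch_with_backoff(url, max_retries=4, base_delay=1.0, max_delay=30.0,
                       jitter=0.5, fetch=_default_fetch, sleep=time.sleep):
    """Fetch `url`, retrying transient failures with exponential backoff."""
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except urllib.error.HTTPError as err:  # must precede URLError, its base class
            # Non-retryable errors (e.g. 404, 403) are raised right away.
            if err.code not in RETRYABLE_STATUSES or attempt == max_retries:
                raise
        except urllib.error.URLError:  # DNS failures, refused connections, etc.
            if attempt == max_retries:
                raise
        # Wait base_delay * 2**attempt (1s, 2s, 4s, ... by default), capped at
        # max_delay, plus random jitter so parallel scrapers don't retry in lockstep.
        delay = min(max_delay, base_delay * 2 ** attempt) + random.uniform(0, jitter)
        sleep(delay)
```

With the defaults, failed attempts wait roughly 1, 2, 4, and 8 seconds (plus up to half a second of jitter) before the final error is raised.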

Troubleshooting Strategies Include:

- Handling transient HTTP errors with retries and exponential backoff.

- Regularly updating scripts to accommodate website changes.

- Using proxies and VPNs to overcome IP bans.

- Implementing data validation techniques to handle format errors.
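Data validation catches format errors before they reach your dataset. The sketch below checks one scraped record against a hypothetical schema: required `title`, `price`, and `date` fields, a dollar price such as `$1,299.00`, and an ISO `YYYY-MM-DD` date. The field names and patterns are assumptions; adjust them to whatever your target site actually returns.

```python
import re
from datetime import datetime

# Hypothetical schema for one scraped product record.
REQUIRED_FIELDS = ("title", "price", "date")
PRICE_RE = re.compile(r"^\$?(\d{1,3}(,\d{3})*|\d+)(\.\d{2})?$")


def _text(record: dict, field: str) -> str:
    """Return a field as a stripped string, treating None as empty."""
    value = record.get(field)
    return "" if value is None else str(value).strip()


def validate_record(record: dict) -> list:
    """Return a list of problems; an empty list means the record looks valid."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not _text(record, field):
            problems.append(f"missing {field}")
    price = _text(record, "price")
    if price and not PRICE_RE.match(price):
        problems.append(f"unexpected price format: {price!r}")
    date = _text(record, "date")
    if date:
        try:
            datetime.strptime(date, "%Y-%m-%d")
        except ValueError:
            problems.append(f"unexpected date format: {date!r}")
    return problems
```

Returning a list of problems, rather than raising on the first one, lets you log every issue with a record and decide separately whether to drop it, fix it, or re-scrape the page.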

Conclusion

Handling web scraping errors is a vital skill for any aspiring data professional. It may seem daunting at first, but with practice and the right strategies you can keep these errors to a minimum. Explore tools such as BeautifulSoup for parsing HTML and Scrapy for building crawlers, and keep learning to sharpen your web scraping skills.