Introduction

Web scraping lets businesses and individuals gather data from websites for purposes such as market research, competitive analysis, and content aggregation. However, the process comes with its own set of challenges, which can impede your efforts to collect data effectively. In this article, we will explore the common challenges faced during web scraping and introduce proxy rotation as a powerful way to overcome some of these obstacles.

Use-Cases of Web Scraping

Web scraping involves using automated tools or scripts to access and collect information from web pages, and it has applications in many fields.

Here's a more detailed look at the main use-cases of web scraping:

- Data Extraction: Web scraping is primarily used to gather data from websites. This data can include text, images, product information, contact details, financial data, and much more. Essentially, any data that is publicly available on the internet can be harvested using web scraping.

- Market Research: Businesses often use web scraping to monitor market trends, track competitors, and gather customer reviews and feedback. This information can be invaluable in making informed business decisions.

- Price Monitoring: E-commerce companies frequently employ web scraping to monitor and compare prices of products across different websites. This allows them to adjust their pricing strategies to remain competitive. Learn how eCommerce brands use price monitoring to keep track of their competitors.

- Content Aggregation: News websites, content publishers, and bloggers use web scraping to aggregate content from various sources. This is useful for creating news feeds, curating content, and staying up-to-date with the latest information.

- Lead Generation: Sales and marketing teams use web scraping to find potential leads and gather contact information. This enables them to target their sales efforts more effectively.

- Real Estate and Property Data: Web scraping can be used to gather information about available properties, their prices, and location-specific data. This is valuable for both buyers and real estate professionals.

- Academic Research: Researchers often scrape data from the internet to analyze trends, conduct surveys, or collect large datasets for academic purposes.

- Weather and Environmental Data: Meteorologists and environmental scientists use web scraping to collect data from various sources to create accurate forecasts and monitor environmental changes.

Importance of Data in Decision-Making and Business Strategies

Data plays a pivotal role in decision-making and business strategies. In today's data-driven world, the ability to access and analyze relevant information is a competitive advantage.

Here's why data is so important:

- Informed Decision-Making: Data provides businesses and individuals with the insights needed to make informed decisions. Whether it's choosing a marketing strategy, setting prices, or entering new markets, data-driven decisions are more likely to lead to success.

- Competitive Analysis: Understanding your competitors' strategies and market positioning is crucial. Web scraping allows you to collect data on competitors, helping you identify strengths, weaknesses, and opportunities.

- Market Research: Market dynamics are constantly changing. Data collected through web scraping helps businesses keep a finger on the pulse of their industry, enabling them to adapt to market shifts and stay competitive.

- Customer Insights: Web scraping can provide valuable customer feedback, reviews, and sentiment analysis. This data is essential for tailoring products and services to meet customer expectations.

- Efficiency and Automation: Automation of data collection through web scraping saves time and resources. It streamlines the process of gathering information, allowing businesses to focus on analysis and strategy.

Web scraping is a powerful tool that empowers individuals and organizations to harness the vast amounts of data available on the internet. This data, when properly collected and analyzed, becomes the cornerstone for informed decision-making and the development of effective business strategies. As the digital landscape continues to evolve, the importance of web scraping in acquiring, analyzing, and leveraging data is likely to grow, making it an essential skill and technology for various industries and applications.

Common Challenges in Web Scraping

Web scraping, while a powerful tool for data extraction, often comes with a set of challenges that individuals and businesses need to overcome to ensure a successful and ethical scraping operation.

Here's a more in-depth exploration of these common challenges:

- IP Bans and Restrictions:

Challenge: Many websites employ measures to block or restrict access to their data when they detect an excessive number of requests from a single IP address.

Solution: To mitigate the risk of IP bans, users often employ proxy servers or rotate IP addresses. By distributing requests across different IPs, each address stays below the request volume that would trigger a block. See the in-depth article by Bright Data for more detail.
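As a rough illustration of the IP rotation described above, the following Python sketch cycles through a pool of proxies in round-robin order and skips any that have been marked as banned. The `ProxyRotator` class, its method names, and the proxy addresses are hypothetical and chosen only for this example; the helper at the end assumes the third-party `requests` library, whose `proxies=` argument accepts a mapping of this shape.

```python
import itertools
from typing import Dict, Iterable, Iterator, Set


class ProxyRotator:
    """Cycle through a pool of proxies, skipping ones marked as banned."""

    def __init__(self, proxies: Iterable[str]) -> None:
        self._pool = list(proxies)
        if not self._pool:
            raise ValueError("proxy pool must not be empty")
        self._banned: Set[str] = set()
        self._cycle: Iterator[str] = itertools.cycle(self._pool)

    def mark_banned(self, proxy: str) -> None:
        """Record that the target site appears to have blocked this proxy."""
        self._banned.add(proxy)

    def next_proxy(self) -> str:
        """Return the next usable proxy in round-robin order."""
        # Inspect each pool entry at most once per call.
        for _ in range(len(self._pool)):
            candidate = next(self._cycle)
            if candidate not in self._banned:
                return candidate
        raise RuntimeError("every proxy in the pool is marked as banned")


def proxies_for_requests(proxy: str) -> Dict[str, str]:
    """Build the mapping passed as `proxies=` to requests.get()."""
    return {"http": proxy, "https": proxy}


# Hypothetical proxy addresses, for illustration only.
rotator = ProxyRotator(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
print(proxies_for_requests(rotator.next_proxy()))
```

In a real scraper, each request would take a fresh proxy from `next_proxy()`, and a response that suggests a block (for instance HTTP 403 or 429) would be a reasonable moment to call `mark_banned()`. Which status codes count as a ban depends on the target site, so that rule is left to the caller.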

- Rate Limiting:

Challenge: Websites impose rate limits to control the frequency of requests. Exceeding these limits can result in temporary or permanent bans.

Solution: Adhering to the rate limits is essential. Users can set scraping bots to slow down their requests, ensuring compliance with the website's policies. Check out this article to learn how rate limiting can be used to stop bot attacks.

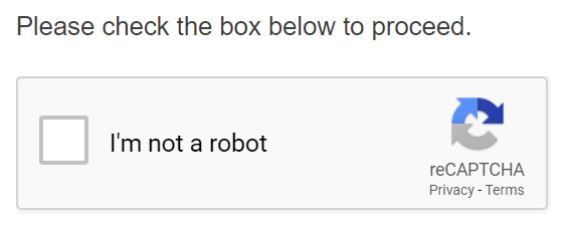
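Slowing a scraper down, as suggested above, can be sketched in a few lines of Python. The `Throttle` class below enforces a minimum gap between requests, and `backoff_delay` computes an exponentially growing wait for retries after the site signals that a limit was hit. Both names, the two-second interval, and the backoff parameters are assumptions made for this example; the right values depend on the target website's policies.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval
        self._clock = clock  # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> float:
        """Sleep until min_interval has passed since the previous call.

        Returns the number of seconds slept.
        """
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
            if delay > 0:
                self._sleep(delay)
        # Record when this request is considered to start.
        self._last = now + delay
        return delay


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Exponential backoff: base * 2**attempt seconds, never more than cap."""
    return min(cap, base * (2 ** attempt))


throttle = Throttle(min_interval=2.0)  # at most one request every two seconds
throttle.wait()  # first call returns immediately
```

Calling `throttle.wait()` before every request keeps the scraper under a fixed pace, while `backoff_delay(attempt)` is meant for the retry path, for example after an HTTP 429 response.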

- CAPTCHAs and Other Anti-Bot Measures:

Challenge: Some websites employ CAPTCHAs, puzzles, or other anti-bot measures to verify that the user is a human, making it difficult for automated scraping bots to access data.

Solution: When faced with CAPTCHAs, users can employ CAPTCHA-solving services, which use human workers to solve these challenges. Some advanced scraping tools also incorporate CAPTCHA-solving mechanisms.

To understand how CAPTCHA technology is used to prevent bots from disrupting websites, read the article by AVG.

- Dynamic Content Rendering:

Challenge: Many modern websites use dynamic content loaded via JavaScript. This content can be challenging to scrape with traditional methods as it requires interacting with the web page as a user would.

Solution: Users can drive a headless browser with automation tools such as Puppeteer or Selenium to render and interact with dynamic content, making it accessible for scraping.

- Legal and Ethical Considerations:

Challenge: Ethical and legal considerations are critical in web scraping. Scraping without permission or scraping sensitive data can lead to legal repercussions and ethical concerns.

Solution: To address these concerns, users should always check a website's "robots.txt" file to understand its crawling rules. Obtaining explicit permission from website owners, focusing on public data, and respecting terms of service are crucial for ethical scraping practices.

Because laws and regulations on web scraping remain unclear, OpenAI could potentially face multiple lawsuits. An article by Bloomberg Law goes into more detail.
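The "robots.txt" check mentioned above can be automated with Python's standard library. The sketch below parses a small, made-up robots.txt and asks whether a given URL may be fetched; the sample rules, the `example-scraper` user agent, and the `example.com` URLs are hypothetical. Passing this check says nothing about a site's terms of service, which still need to be read separately.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-scraper"  # hypothetical user-agent name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, url: str, user_agent: str = USER_AGENT) -> bool:
    """Return True if the parsed rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(SAMPLE_ROBOTS_TXT)
print(is_allowed(parser, "https://example.com/products"))      # True
print(is_allowed(parser, "https://example.com/private/data"))  # False
print(parser.crawl_delay(USER_AGENT))                          # 5
```

Against a live site, the same parser can load the file itself with `parser.set_url("https://example.com/robots.txt")` followed by `parser.read()`. The `Crawl-delay` value, when a site provides one, is a natural input for whatever rate limit the scraper applies.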

- Data Consistency:

Challenge: Web pages can have inconsistent structures and data formats, making it challenging to extract clean and structured information.

Solution: Users need to develop robust scraping scripts that can handle variations in data presentation. Regularly testing and updating scripts can help maintain data consistency.
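One way to make a script tolerant of varying page structures is to normalize every scraped record through a small set of defensive helpers. The Python sketch below is only an illustration: the field names (`title`, `Title`, `sale_price`, and so on) are invented, and `parse_price` assumes commas are thousands separators and a dot is the decimal point, which will not hold for every locale.

```python
import re
from typing import Any, Dict, Iterable, Optional

# Matches "1,299.00", "1,299", "1299", or "45.5" (comma = thousands separator).
_PRICE = re.compile(r"\d{1,3}(?:,\d{3})+(?:\.\d+)?|\d+(?:\.\d+)?")


def parse_price(raw: Optional[str]) -> Optional[float]:
    """Extract the first number from a price string, or None if there is none."""
    if raw is None:
        return None
    match = _PRICE.search(raw)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


def first_present(record: Dict[str, Any], keys: Iterable[str]) -> Any:
    """Return the first non-empty value found under any of the candidate keys."""
    for key in keys:
        value = record.get(key)
        if value not in (None, ""):
            return value
    return None


def normalize(record: Dict[str, Any]) -> Dict[str, Any]:
    """Map differently named raw fields onto one consistent schema."""
    name = first_present(record, ["title", "Title", "product_name"])
    price = first_present(record, ["price", "Price", "sale_price"])
    return {
        "name": name.strip() if isinstance(name, str) else None,
        "price": parse_price(price) if isinstance(price, str) else None,
    }


print(normalize({"Title": " Widget ", "sale_price": "$1,299.00"}))
# {'name': 'Widget', 'price': 1299.0}
```

Returning `None` for anything that cannot be parsed, instead of raising, lets a long scraping run continue while still making gaps visible in the output. Counting those `None` values over time is a simple signal that a site's layout has changed and the script needs updating.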

In conclusion, web scraping is a valuable tool for extracting data from the web, but it comes with its share of challenges. Overcoming these challenges requires a combination of technical skills, ethical considerations, and the use of appropriate tools and techniques. By understanding and addressing these common obstacles, individuals and businesses can harness the power of web scraping for various purposes while maintaining compliance with legal and ethical standards.
