Introduction

In an era where data is ubiquitously hailed as the new oil, understanding and ensuring the quality of this valuable asset is paramount, especially in large corporations like those in the Fortune 500. But what exactly is 'data quality', and why is it so crucial in today's business landscape?

What Is Data Quality?

At its core, data quality refers to the suitability of data to serve its intended purpose. It encompasses dimensions such as accuracy, completeness, reliability, relevance, and timeliness. Good quality data should be free from errors, up-to-date, and directly applicable to the problems or decisions at hand.

What Is the Data Quality Process?

The data quality process is the ongoing work of improving data and maintaining its integrity and usefulness throughout its lifecycle. It relies on data quality control: practices and tools designed to identify, correct, and prevent errors in data. It is a proactive approach, ensuring that data meets the stringent standards required for business operations as it is collected, processed, stored, and used.

What Does Quality Mean in the Context of Data?

Quality, in its broadest sense, refers to the degree of excellence of something. When applied to data, it translates to how well the data serves its purpose in decision-making, strategy formulation, and operations. Quality data is accurate, complete, consistent, timely, and relevant – each of these attributes contributes to the overall effectiveness of data-driven initiatives.

In a Fortune 500 company, where decisions can have far-reaching implications, ensuring data quality is not just a technical necessity but a strategic imperative. Data quality therefore becomes a foundational aspect of how these companies operate and succeed in the digital age.

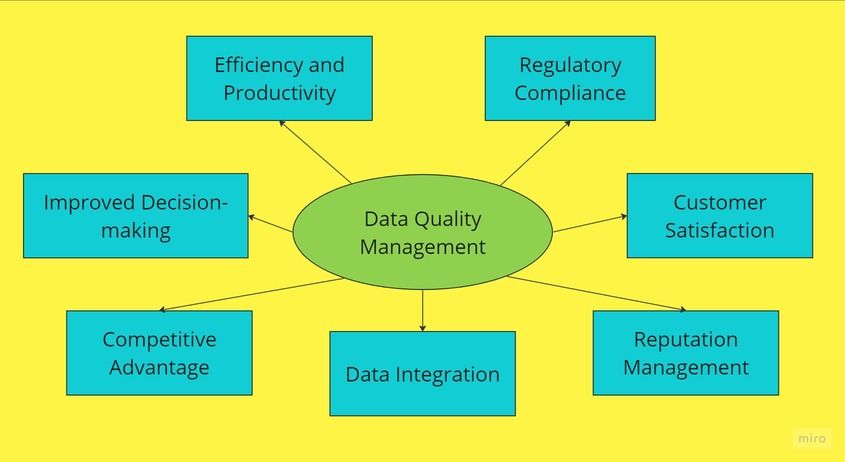

Why Do You Need Data Quality Management?

In today's fast-paced business environment, especially at a Fortune 500 company, data quality management (DQM) is not just a luxury, but a necessity. Here are the key reasons why:

Improved Decision-Making

At the heart of any major corporation, decision-making is driven by data. Quality data ensures that these decisions are based on accurate, reliable, and timely information. Poor data integrity, on the other hand, can lead to erroneous decisions that might cost the company dearly in terms of resources, reputation, and opportunities. Check out this article Harvard Business School published about the effects of data-driven decision-making.

Efficiency and Productivity

High-quality data streamlines business processes. It reduces the time and resources spent on correcting errors and reconciling discrepancies, thereby enhancing operational efficiency. In a Fortune 500 company, where scale and complexity of operations are immense, even minor improvements in efficiency can lead to significant cost savings and productivity boosts.

Regulatory Compliance

Large corporations are often subject to stringent regulatory requirements. Accurate, high-quality data is essential to comply with legal and regulatory standards. Failing to measure data quality and meet these standards can result in hefty fines and legal issues, not to mention damage to the company's reputation.

Customer Satisfaction

In an era where customer experience is paramount, maintaining high-quality data about customer interactions, preferences, and feedback is crucial. It enables the company to provide personalized services, respond promptly to customer needs, and build long-term customer relationships.

Competitive Advantage

In a Fortune 500 company, maintaining a competitive edge is about leveraging data effectively. High-quality data provides deeper insights, helps in identifying market trends, understanding customer behavior, and developing innovative solutions. It's a key differentiator in a market where companies vie for every inch of competitive advantage.

Data Integration

As large companies often deal with various data sources and systems, ensuring the quality of data becomes critical when integrating these disparate systems. Effective data quality management ensures that the integrated data is accurate, complete, and consistent, providing a unified view of the business.

Reputation Management

The quality of data directly impacts the company's reputation. Accurate data reflects the company's commitment to excellence and reliability, whereas poor or inconsistent data quality can tarnish the company's image and erode trust among stakeholders.

In summary, data quality management is an indispensable part of business strategy at a Fortune 500 company. It underpins every aspect of the business, from operational efficiency and regulatory compliance to customer satisfaction and competitive positioning. Investing in robust DQM practices is not just about managing data; it's about ensuring the success and sustainability of the business in a data-driven world.

Learn how to create a data quality management process with these steps.

Data quality management tools and techniques

In the realm of data quality management (DQM), a wide array of tools and techniques are employed, especially in large corporations like those in the Fortune 500, to ensure the integrity, accuracy, and usability of data. Below are some key tools and techniques used in DQM:

Data Profiling Tools

These tools are used to assess the quality of data by analyzing its content, structure, and consistency. Data profiling helps in identifying anomalies, inconsistencies, and patterns in the data, which are critical for understanding data usage and improving its quality.
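As a rough illustration of what a profiling tool computes, the sketch below profiles a list of records (plain Python dicts) column by column: null count, distinct count, the Python types observed, and the most common value. The `profile` function and the sample records are hypothetical, and treating empty strings as nulls is an assumption; commercial profiling tools report far more, such as value patterns and distributions.

```python
from collections import Counter
from typing import Any


def profile(records: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Return per-column null count, distinct count, types seen, and top value."""
    columns = sorted({key for row in records for key in row})
    report: dict[str, dict[str, Any]] = {}
    for col in columns:
        # A missing key, an explicit None, and an empty string all count as null here.
        values = [row.get(col) for row in records]
        present = [v for v in values if v is not None and v != ""]
        counts = Counter(present)
        report[col] = {
            "nulls": len(values) - len(present),
            "distinct": len(counts),
            "types": sorted({type(v).__name__ for v in present}),
            "most_common": counts.most_common(1)[0][0] if counts else None,
        }
    return report


# Hypothetical sample data with a few deliberate problems.
customers = [
    {"id": 1, "email": "ana@example.com", "country": "US"},
    {"id": 2, "email": "", "country": "us"},
    {"id": 3, "email": "li@example.com", "country": "US", "phone": 5551234},
    {"id": "4", "email": "li@example.com", "country": None},
]

for column, stats in profile(customers).items():
    print(column, stats)
```

On this sample, the report surfaces the mixed `int`/`str` types in `id`, the `US`/`us` casing split in `country`, and the repeated email address: the kinds of anomalies and inconsistencies profiling is meant to expose.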

Data Cleansing Software

Once data quality issues are identified, data cleansing tools are used to correct errors and inconsistencies. These tools help in standardizing, de-duplicating, and validating data to ensure its accuracy and completeness.
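A minimal sketch of the three cleansing steps named above (standardizing, validating, de-duplicating), assuming customer records arrive as Python dicts with `name`, `email`, and `country` fields. The field names, the deliberately loose email pattern, and the "first occurrence wins" de-duplication rule are illustrative assumptions, not how any particular cleansing product works.

```python
import re
from typing import Any

# Deliberately loose pattern for illustration; real email validation is more involved.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def standardize(row: dict[str, Any]) -> dict[str, Any]:
    """Trim whitespace and normalize casing on a few known fields."""
    clean = dict(row)
    clean["email"] = (row.get("email") or "").strip().lower()
    clean["country"] = (row.get("country") or "").strip().upper()
    # Collapse repeated internal spaces, then title-case the name.
    clean["name"] = " ".join((row.get("name") or "").split()).title()
    return clean


def is_valid(row: dict[str, Any]) -> bool:
    """Require a plausible email and a two-letter country code."""
    return bool(EMAIL_RE.match(row["email"])) and len(row["country"]) == 2


def cleanse(rows: list[dict[str, Any]]) -> tuple[list[dict[str, Any]], list[dict[str, Any]]]:
    """Standardize, validate, then de-duplicate on email (first occurrence wins)."""
    kept: list[dict[str, Any]] = []
    rejected: list[dict[str, Any]] = []
    seen: set[str] = set()
    for row in map(standardize, rows):
        if not is_valid(row):
            rejected.append(row)  # set aside for manual review
        elif row["email"] not in seen:
            seen.add(row["email"])
            kept.append(row)
        # Later duplicates of an already-kept email are silently dropped.
    return kept, rejected


raw = [
    {"name": "  ana   lopez ", "email": "Ana@Example.com ", "country": "us"},
    {"name": "Ana Lopez", "email": "ana@example.com", "country": "US"},
    {"name": "Li Wei", "email": "not-an-email", "country": "CN"},
]
kept, rejected = cleanse(raw)
print(kept)      # one Ana Lopez record survives
print(rejected)  # the invalid email is set aside
```

Standardizing before de-duplicating matters here: without it, the first two rows would look like two different customers.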

Master Data Management (MDM) Systems

MDM solutions play a crucial role in ensuring consistency and control over master data entities like customer, product, and supplier data. They help in creating a single, authoritative view of these data entities across the enterprise.
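MDM products differ widely in how they build the single authoritative view, but a common idea is a survivorship rule that decides which source's value wins for each field. The sketch below assumes records have already been matched on a shared `customer_key` and applies one simple, hypothetical rule: the most recently updated non-empty value wins. The source system names and fields are invented; real MDM systems typically combine matching logic, source trust rankings, and stewardship review.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class SourceRecord:
    system: str                  # hypothetical source system, e.g. "crm" or "billing"
    customer_key: str            # shared key the records were already matched on
    updated: date                # when this source last updated the record
    attributes: dict[str, str]


def golden_record(records: list[SourceRecord]) -> dict[str, str]:
    """Merge matched records: for each field, the most recently updated non-empty value wins."""
    merged: dict[str, str] = {}
    # Process oldest first so newer non-empty values overwrite older ones.
    for rec in sorted(records, key=lambda r: r.updated):
        for name, value in rec.attributes.items():
            if value:
                merged[name] = value
    return merged


matches = [
    SourceRecord("crm", "C-17", date(2023, 1, 5),
                 {"name": "Ana Lopez", "phone": "555-0100", "city": "Austin"}),
    SourceRecord("billing", "C-17", date(2023, 6, 2),
                 {"name": "Ana M. Lopez", "phone": "", "city": "Dallas"}),
]
print(golden_record(matches))
# {'name': 'Ana M. Lopez', 'phone': '555-0100', 'city': 'Dallas'}
```

Note that the older phone number survives because the newer source left that field empty; whether that is the right behavior is a business decision an MDM program has to make explicitly.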

Data Integration Tools

These tools assist in combining data from different sources, ensuring that the integrated data maintains its quality. They handle various tasks such as data extraction, transformation, and loading (ETL), which are essential for maintaining the quality of data in large-scale integrations.

Data Governance Frameworks

Data governance is essential for defining standards, policies, and procedures for data management. It involves setting up roles, responsibilities, and processes to ensure accountability and control over data assets. Learn more about data governance frameworks from Talend in this in-depth article.

Data Quality Metrics and Monitoring Tools

Implementing metrics and continuous monitoring is vital for maintaining long-term data quality. These tools help in tracking key data quality indicators like accuracy, completeness, consistency, and timeliness, providing insights into the health of data over time.
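To make "tracking key indicators" concrete, here is a small sketch that scores one batch of records on two of the metrics mentioned (completeness and timeliness) and raises an alert when a score falls below its target. The 95% and 90% thresholds, the 24-hour freshness window, and the `updated_at` field are hypothetical; in practice such checks would run on a schedule and feed a dashboard or alerting system.

```python
from datetime import datetime, timedelta, timezone
from typing import Any

# Hypothetical targets; each organization sets its own.
THRESHOLDS = {"completeness": 0.95, "timeliness": 0.90}
MAX_AGE = timedelta(hours=24)


def completeness(rows: list[dict[str, Any]], required: list[str]) -> float:
    """Share of required cells that are populated (None and "" count as missing)."""
    total = len(rows) * len(required)
    filled = sum(1 for row in rows for col in required if row.get(col) not in (None, ""))
    return filled / total if total else 1.0


def timeliness(rows: list[dict[str, Any]], now: datetime, max_age: timedelta = MAX_AGE) -> float:
    """Share of rows updated within max_age of `now`."""
    if not rows:
        return 1.0
    fresh = sum(1 for row in rows if now - row["updated_at"] <= max_age)
    return fresh / len(rows)


def check(rows: list[dict[str, Any]], required: list[str], now: datetime):
    """Compute each metric and list the ones that fall below their threshold."""
    scores = {
        "completeness": completeness(rows, required),
        "timeliness": timeliness(rows, now),
    }
    alerts = [name for name, score in scores.items() if score < THRESHOLDS[name]]
    return scores, alerts


now = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
rows = [
    {"sku": "A1", "price": 9.99, "updated_at": now - timedelta(hours=2)},
    {"sku": "A2", "price": None, "updated_at": now - timedelta(hours=5)},
    {"sku": "A3", "price": 4.50, "updated_at": now - timedelta(days=3)},
    {"sku": "A4", "price": 12.00, "updated_at": now - timedelta(hours=1)},
]
scores, alerts = check(rows, ["sku", "price"], now)
print(scores)  # completeness 7/8 = 0.875, timeliness 3/4 = 0.75
print(alerts)  # ['completeness', 'timeliness']
```

Storing these scores per batch over time is what turns a one-off check into the long-term view of data health described above.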

Advanced Analytical Techniques

With the advent of big data and AI, advanced analytical techniques such as machine learning algorithms are increasingly being used for predictive data quality management. These techniques can identify patterns that indicate potential data quality problems.
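Machine learning models for data quality vary too much to sketch faithfully here, so the example below swaps in a much simpler statistical technique: a z-score check on daily row counts that flags a load deviating sharply from its history. The row counts and the default limit of 3 standard deviations are hypothetical choices, not recommendations.

```python
from statistics import mean, stdev


def is_anomalous(history: list[int], today: int, z_limit: float = 3.0) -> bool:
    """Flag today's value if it lies more than z_limit standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # any change from a perfectly constant history is unusual
    return abs(today - mu) / sigma > z_limit


# Hypothetical row counts from the last seven daily loads of one table.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940, 10_070, 10_030]
print(is_anomalous(daily_row_counts, 10_100))  # False: a normal day
print(is_anomalous(daily_row_counts, 4_300))   # True: likely a partial or failed load
```

A learned model can capture seasonality and relationships between fields that a single z-score cannot, but the principle is the same: compare new data against an expectation built from past data.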

Cloud-based Data Quality Tools

Cloud platforms offer scalable and flexible data quality tools that can handle large volumes of data. These tools are especially beneficial for large organizations that deal with big data and need robust, scalable solutions.

The integration of these tools and techniques into the data management strategy is critical. They not only ensure the quality of data but also enhance operational efficiency, decision-making, regulatory compliance, and overall business performance. As such, these data quality management tools and techniques are not just about maintaining data; they are about empowering the business with reliable, actionable insights.

What is good data quality?

Good data quality is a multifaceted concept, particularly vital in large organizations like Fortune 500 companies, where the accuracy and reliability of data can significantly impact business outcomes. Essentially, good data quality is characterized by several key attributes:

- Accuracy: The data should accurately represent the real-world values or conditions it is meant to depict. It should be free from errors and precisely reflect the correct information.
- Completeness: All necessary data should be present, and there should be no missing values or gaps in the data sets.
- Consistency: The data should be consistent across different files, systems, and formats. There should be no contradictions or discrepancies when the same data is presented in different contexts.
- Timeliness: Data should be up-to-date and available when needed. Outdated data can lead to misguided decisions and missed opportunities.
- Relevance: The data should be pertinent and applicable to the specific needs and context of the business.
- Reliability: The data should be trustworthy and sourced from credible sources, ensuring that it can be relied upon for making important business decisions.

Examples of Good Data Quality:

E-Commerce Product Data

Consider an e-commerce platform of a Fortune 500 company. Here, good data quality would mean that the product listings are accurate (correct description, price), complete (all necessary details like size, color, stock status are provided), consistent (the same product is described similarly across various categories or listings), timely (availability and prices are updated in real-time), relevant (product information meets the needs of the consumers), and reliable (sourced from trustworthy suppliers or manufacturers).

Learn more about how data quality can impact E-commerce performance.

Customer Data in a CRM System

In a customer relationship management (CRM) system, data quality dimensions would manifest as accurate customer details (correct names, contact information), complete records (including all relevant customer interactions, purchases, and preferences), data consistency across different departments (sales, marketing, customer service all have the same updated customer information), timeliness (customer data is regularly updated), relevance (data pertinent to understanding and serving the customer is collected), and reliability (the data is collected from credible and legitimate sources).
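The consistency requirement in the CRM example can be checked mechanically. The sketch below assumes each department's system can export customer records keyed by a shared customer ID (the system names, IDs, and fields are hypothetical) and reports every field whose values disagree after light normalization (trimming and lower-casing).

```python
Record = dict[str, str]
SystemData = dict[str, dict[str, Record]]  # system name -> {customer_id: record}


def find_inconsistencies(systems: SystemData) -> list[tuple[str, str, dict[str, str]]]:
    """For each customer ID and field, report the values that differ between systems."""
    issues = []
    all_ids = sorted({cid for records in systems.values() for cid in records})
    for cid in all_ids:
        # Gather this customer's record from every system that has one.
        views = {name: recs[cid] for name, recs in systems.items() if cid in recs}
        fields = sorted({f for rec in views.values() for f in rec})
        for field in fields:
            values = {name: rec[field] for name, rec in views.items() if field in rec}
            # Normalize before comparing so "Ana@Example.com" matches "ana@example.com".
            if len({v.strip().lower() for v in values.values()}) > 1:
                issues.append((cid, field, values))
    return issues


systems = {
    "sales":     {"C-17": {"email": "ana@example.com", "phone": "555-0100"}},
    "marketing": {"C-17": {"email": "Ana@Example.com", "phone": "555-0199"}},
    "service":   {"C-17": {"email": "ana@example.com"}},
}
for issue in find_inconsistencies(systems):
    print(issue)
# ('C-17', 'phone', {'sales': '555-0100', 'marketing': '555-0199'})
```

The email difference is treated as cosmetic and ignored, while the conflicting phone numbers are reported because at least one department holds outdated contact information.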

In both examples, maintaining high data quality ensures effective operations, enhances customer satisfaction, and supports informed decision-making, which are crucial for the success and competitiveness of a Fortune 500 company.

9 Popular Data Quality Characteristics and Dimensions

In the realm of data management, particularly for a Fortune 500 company, understanding the various characteristics and dimensions of data quality is crucial. These dimensions help in evaluating, managing, and improving the quality of data. Here are nine widely recognized data quality characteristics and dimensions:

- Accuracy: Refers to the correctness of the data. Accurate data correctly represents real-world values or conditions. For instance, in financial data, accuracy is paramount for ensuring correct transactions and reports.
- Completeness: This dimension assesses whether all necessary data is present. Incomplete data can lead to erroneous conclusions, such as missing out on potential market opportunities due to incomplete customer data analysis.
- Consistency: Consistency means that the data is uniform across various data sources and systems. For example, a customer's name should appear the same in marketing, sales, and customer service databases.
- Timeliness: This involves the data being up-to-date and available when needed. Timeliness is critical for time-sensitive decisions, like stock market investments or supply chain management in a fast-paced retail environment.
- Validity: Refers to data being in the correct format and within acceptable ranges. For example, a date field containing "13/32/2021" would violate validity rules.
- Reliability: This dimension measures the trustworthiness of the data source and its collection process. Reliable data is crucial for high-stakes decisions, such as merger and acquisition strategies.
- Uniqueness: Ensures no duplication of data. In customer databases, unique records prevent multiple marketing outreaches to the same individual, saving resources and preserving brand image.
- Relevance: This is about the data being appropriate and applicable for the intended use. Relevance is key for targeted marketing campaigns, where irrelevant data can lead to misdirected efforts and resources.
- Integrity: Refers to the data maintaining its structure and relationships across systems. Data integrity is crucial for accurate analytics, especially when combining data from disparate sources for big data analytics.
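Several of these dimensions translate directly into automated rules. As an illustration, the sketch below implements two of them: a validity check that rejects impossible dates (such as the "13/32/2021" example above) and a uniqueness check that finds duplicated customer keys. The month/day/year format and the sample keys are assumptions; a real check should use whatever format your systems actually store.

```python
from collections import Counter
from datetime import datetime


def invalid_dates(values: list[str], fmt: str = "%m/%d/%Y") -> list[str]:
    """Validity: return the values that do not parse as real calendar dates in `fmt`."""
    bad = []
    for value in values:
        try:
            datetime.strptime(value, fmt)
        except ValueError:
            bad.append(value)
    return bad


def duplicate_keys(keys: list[str]) -> list[str]:
    """Uniqueness: return the keys that appear more than once."""
    return [key for key, count in Counter(keys).items() if count > 1]


print(invalid_dates(["12/31/2021", "13/32/2021", "02/29/2021"]))
# ['13/32/2021', '02/29/2021']  (2021 was not a leap year)
print(duplicate_keys(["C-1", "C-2", "C-1", "C-3"]))
# ['C-1']
```

Because `strptime` checks real calendar rules, it catches subtle errors like February 29 in a non-leap year, not just obviously malformed strings.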

The importance of these dimensions has grown with the advent of big data. The sheer volume, variety, and velocity of data in large corporations necessitate robust data quality frameworks that encompass these dimensions.

Why Is Data Quality Important to an Organization?

In the context of a Fortune 500 company, where data is a strategic asset, the importance of data quality cannot be overstated. High-quality data is fundamental to numerous aspects of an organization’s operations and strategic goals:

- Informed Decision-Making: The cornerstone of any successful business strategy is decision-making based on solid, reliable data. High-quality data ensures that leaders and managers have accurate, timely, and relevant information to make informed decisions. Poor data integrity, conversely, can lead to misinformed decisions that may have adverse impacts on the business.
- Enhanced Customer Experience: Quality data enables a company to better understand its customers' needs, preferences, and behaviors. This insight is crucial for tailoring products and services, personalizing marketing efforts, and improving customer service, all of which are key to customer satisfaction and loyalty.
- Operational Efficiency: Good data quality leads to streamlined operations. It reduces the time and resources spent on rectifying data errors, thereby improving overall efficiency. This is particularly crucial in large organizations where operational complexities are high.
- Compliance and Risk Management: For Fortune 500 companies, regulatory compliance is vital. High-quality data ensures that the organization stays compliant with various legal and regulatory requirements. It also reduces the risks associated with incorrect data, such as financial penalties and reputational damage.
- Competitive Advantage: In today's data-driven world, organizations that leverage high-quality data effectively gain a competitive edge. They are better positioned to identify market trends, innovate, and stay ahead of competitors.
- Financial Performance: Accurate and reliable data impacts the bottom line. It enables better financial analysis, forecasting, and budgeting, leading to more strategic financial decisions and improved financial health of the organization.
- Trust and Credibility: High data quality enhances the credibility of the organization both internally and externally. Stakeholders, including investors, customers, and partners, are more likely to trust and engage with an organization that demonstrates accuracy and reliability in its data practices.

Data quality is a critical component that underpins various facets of an organization's success. For a Fortune 500 company, where the scale and impact of decisions are significant, ensuring high data quality is not just a technical requirement but a strategic imperative.

Check out what Forbes has to say about data quality.

What's the Difference Between Data Quality Assurance and Data Quality Control?

In the context of a Fortune 500 company, where the stakes of data quality management are high, understanding the distinction between Data Quality Assurance (DQA) and Data Quality Control (DQC) is crucial. These two concepts, while interconnected, focus on different aspects of data quality management.

Data Quality Assurance (DQA)

Data Quality Assurance is a proactive process aimed at preventing defects in data. It involves establishing a set of practices and standards to ensure that data is of high quality from the outset. Key aspects of DQA include:

- Setting Standards: Establishing benchmarks and guidelines for data quality that align with business objectives and requirements.
- Process Implementation: Developing and implementing processes and procedures that ensure data quality throughout its lifecycle.
- Training and Awareness: Educating and training employees on the importance of data quality and the practices to maintain it.
- Continuous Improvement: Regularly reviewing and improving data quality processes based on new insights, technologies, and business needs.

In essence, DQA is about building a culture and environment where high data quality is the norm.

Data Quality Control (DQC)

Data Quality Control is a reactive process. It involves checking and validating data for quality after it has been created or processed. DQC focuses on:

- Data Inspection: Examining data for errors, discrepancies, and inconsistencies using various tools and techniques.
- Error Correction: Addressing and rectifying any issues identified during the inspection process.
- Data Monitoring: Continuously monitoring data to ensure it maintains the set quality standards over time.
- Reporting: Documenting issues, resolutions, and improvements in data quality for accountability and future reference.

DQC is about applying data quality rules to identify and rectify any deviations from the established data quality standards.
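The inspection and reporting steps of DQC are often implemented as a set of named rules run against each batch of data. The sketch below shows one hypothetical way to structure that: each rule is a name plus a predicate, and the result lists the failing rows per rule so the issues can be documented and routed for correction. The orders table, its field names, and the allowed statuses are invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable

Row = dict[str, Any]


@dataclass
class Rule:
    name: str
    check: Callable[[Row], bool]  # returns True when the row passes


def run_checks(rows: list[Row], rules: list[Rule]) -> dict[str, list[int]]:
    """Run every rule on every row; return the failing row indexes per rule."""
    failures: dict[str, list[int]] = {rule.name: [] for rule in rules}
    for i, row in enumerate(rows):
        for rule in rules:
            if not rule.check(row):
                failures[rule.name].append(i)
    return failures


# Hypothetical rules for an orders table.
rules = [
    Rule("order_id present", lambda r: bool(r.get("order_id"))),
    Rule("quantity positive", lambda r: isinstance(r.get("quantity"), int) and r["quantity"] > 0),
    Rule("status known", lambda r: r.get("status") in {"open", "shipped", "cancelled"}),
]
orders = [
    {"order_id": "O-1", "quantity": 2, "status": "open"},
    {"order_id": "", "quantity": 0, "status": "shipped"},
    {"order_id": "O-3", "quantity": 1, "status": "lost"},
]
# A simple text report: one line per rule, suitable for logging or a ticket.
for name, failed_rows in run_checks(orders, rules).items():
    print(f"{name}: {len(failed_rows)} failing row(s) {failed_rows}")
```

Keeping rules as data (name plus predicate) rather than scattered `if` statements makes it easier to document which standards exist and to report on each one over time.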

Key Differences

- Focus: DQA focuses on preventing data quality issues by establishing robust processes, whereas DQC is concerned with identifying and correcting data quality issues as they arise.
- Approach: DQA is a proactive approach, embedding quality in the data management processes, while DQC is a reactive approach, dealing with issues after they have occurred.
- Scope: DQA has a broader scope, involving organizational culture and training, while DQC is more technical, focusing on specific data sets and their quality.
- Timing: DQA occurs before and during data creation or acquisition, while DQC typically happens after data is generated or processed.

In a Fortune 500 company, both are essential for meeting emerging data quality challenges. DQA helps in building a foundation for high-quality data, and DQC ensures that the data remains of high quality throughout its lifecycle. Together, they form a comprehensive approach to managing and maintaining data quality.

The Consequences Of Bad Data Quality Control

In the high-stakes environment of a Fortune 500 company, where data-driven decisions are integral to business operations, the repercussions of poor data quality can be far-reaching. Understanding these consequences is crucial for appreciating the importance of robust data quality assessment and control measures.

- Inaccurate Decision-Making: The most immediate impact of poor data quality is on decision-making. Decisions based on inaccurate, incomplete, or outdated data can lead to strategic missteps, financial losses, and missed opportunities. In a large organization, these decisions can have cascading effects across various departments and functions.
- Reduced Operational Efficiency: Poor data quality can significantly hamper operational efficiency. It can result in wasted time and resources as employees grapple with data errors, inconsistencies, and processing delays. This inefficiency can be particularly detrimental in areas like supply chain management, where precise data is critical.
- Compliance Risks: For Fortune 500 companies, regulatory compliance is paramount. Poor data accuracy can lead to non-compliance with legal and regulatory standards, resulting in legal penalties, fines, and a damaged reputation. This is especially true in industries like finance and healthcare, where data accuracy is closely regulated.
- Customer Dissatisfaction: Inaccurate or outdated customer data can lead to poor customer experiences. Errors in personal information, billing, or service delivery, stemming from bad data quality, can erode customer trust and loyalty, which is hard to regain.
- Financial Losses: The financial implications of poor data accuracy are significant. It can lead to incorrect financial reporting, faulty budgeting, and poor financial planning. In the worst-case scenario, it can even lead to substantial financial losses due to errors going unnoticed.
- Damaged Reputation: In today's information age, a company's reputation is closely tied to its data practices. Poor data quality can lead to publicized errors, data breaches, or customer dissatisfaction, all of which can tarnish a company's image.
- Impaired Data-Driven Initiatives: Bad data quality hinders the effectiveness of data-driven projects and initiatives. Whether it's analytics, machine learning, or business intelligence, the output is only as good as the input data. Poor quality data can lead to misleading analytics results and misguided strategies.

Emphasizing Data Quality and Data Quality Control

To mitigate these consequences, it's essential to emphasize robust data quality measures, including:

- Regular Data Quality Assessments: Conducting frequent assessments to check the accuracy, completeness, and consistency of data.
- Implementing Data Quality Tools: Using advanced tools for real-time monitoring, cleansing, and validation of data.
- Establishing Data Governance Frameworks: Creating comprehensive data governance policies to standardize data handling and ensure accountability.

The consequences of poor data quality in a Fortune 500 company can be severe, affecting every facet of the business. By prioritizing data quality and implementing effective quality control processes and measures, organizations can avoid these pitfalls and harness the full potential of their data assets.

Leveraging DataHen's Expertise for Hassle-Free Email Scraping

In the dynamic world of email marketing, having access to up-to-date and relevant email lists is vital, but the process of acquiring them can be daunting. This is where DataHen comes into the picture—streamlining the web scraping process to bolster your email marketing campaigns without the need for in-house scraping tools or expertise.

Click the link here to learn more.